Introduction

As AI becomes more accurate and more widely available, almost every company with data is adopting it, training models from examples rather than hand-coding the logic. Demand for data, and for labeled data in particular, keeps growing because AI models learn from examples and must adapt as the data and the requirements of their domains change. While data collection methods can yield large amounts of data, that data has to be cleaned, classified, and annotated by human labelers to achieve high accuracy, a process that is often expensive, time-consuming, and hard to scale.

The Market Standard

Most labeling companies in this industry follow more or less the same process. It traditionally involves a general training stage, where workers learn to use advanced tools for data annotation of all types, followed by domain-specific training sessions to onboard each new dataset. The result is a tedious and labor-intensive job.

After these long stages (some companies take up to 2 weeks!), the labelers work and are sampled periodically for quality. The three main problems with this are hiring, training, and scaling (up and down) a large human crowd.

Concept

A Darwinian Approach to Data Labeling

Borrowing from evolution, tasq.ai has developed a Darwinian approach that applies the “survival of the fittest” principle to data labeling.

Just as with sports and gaming, some of us are more talented than others, and while putting the ball in the basket might be trivial to some, it can take years of training for others. We believe, and have proven, that some people, when faced with labeling tasks, are naturally better than others. By running multiple tests on huge numbers of users from mobile and gaming platforms, we can leverage the data of those who are fit enough to “survive” and identify the DNA of the best annotators.

Micro-tasks as Genes for Data Labeling

In biology, understanding the genetic makeup of an organism means sequencing its most basic building blocks and understanding the genes they form. Similarly, to better understand the capabilities of annotators, we need to break those capabilities down into the smallest possible building blocks and rank each one separately. As a result, we’ve built our entire labeling process on workflows made up of micro-tasks. Each task is simple to perform and test, and usually pertains to a small amount of data. Examples of micro-tasks include detecting simple objects, classifying images, and bounding objects.

By assigning users to different types of tasks and use cases, we measure their success rates and better understand their “genetic” makeup. In turn, we translate the data collected and build models around the DNA of labelers.
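As an illustration of measuring success rates per task type, here is a minimal Python sketch. It assumes each test result is recorded as a `(user_id, task_type, is_correct)` tuple scored against items with known answers; the function names, the tuple layout, and the `min_rate` cutoff are hypothetical and are not a description of tasq.ai's actual system.

```python
from collections import defaultdict


def success_rates(results):
    """Compute the success rate of each user on each task type.

    `results` is an iterable of (user_id, task_type, is_correct) tuples.
    This record layout is an assumption made for the example.
    """
    counts = defaultdict(lambda: [0, 0])  # (user, task_type) -> [correct, total]
    for user_id, task_type, is_correct in results:
        entry = counts[(user_id, task_type)]
        entry[0] += int(is_correct)
        entry[1] += 1
    return {key: correct / total for key, (correct, total) in counts.items()}


def top_users(rates, task_type, min_rate=0.8):
    """Return users whose rate on `task_type` is at least `min_rate`, best first.

    The 0.8 default is an arbitrary illustrative cutoff.
    """
    ranked = sorted(
        (
            (rate, user)
            for (user, kind), rate in rates.items()
            if kind == task_type and rate >= min_rate
        ),
        reverse=True,
    )
    return [user for _, user in ranked]


sample_results = [
    ("u1", "classify", True),
    ("u1", "classify", True),
    ("u2", "classify", False),
    ("u2", "classify", True),
    ("u1", "polygon", False),
]
sample_rates = success_rates(sample_results)
```

Keeping the rate per task type, rather than one score per user, reflects the idea that a user can be strong at classification and weak at drawing polygons.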

Splitting the annotation process into a workflow of small tasks allows us to assign even the most complex tasks to everyday, casual gamers. Since each of them plays only a small part in the process, the amount of training is reduced from days to a matter of minutes.

Method

Consensus – Every Step of The Way

While reaching a huge number of users gives us scale, the main disadvantage is a lack of consistency, a factor that is critical in creating high-quality datasets. By splitting the work into micro-tasks and collecting multiple judgments from different users at every step, we can reach consensus at a granular level and detect labeling issues without a manual QA process. Once an issue is detected, the system automatically requests more judgments until consensus is reached. How these judgments are merged depends on the kind of task and the use case; for example, merging yes/no answers is different from merging polygons drawn by different users.
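The consensus loop for the simplest case, a yes/no micro-task, can be sketched in Python as follows. This is a hedged illustration only: the majority-share rule, the `min_votes`, `threshold`, and `max_votes` values, and the `request_judgment` callback are all assumptions made for the example, not the merging logic actually used in production. Merging polygons would need a different, geometry-aware rule.

```python
from collections import Counter


def consensus(votes, min_votes=3, threshold=0.7):
    """Return the winning label if enough voters agree, otherwise None.

    Agreement is the share of votes for the most common label.
    Both parameters are illustrative assumptions.
    """
    if len(votes) < min_votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= threshold else None


def collect_until_consensus(request_judgment, max_votes=9, **kwargs):
    """Keep requesting judgments until consensus is reached or the budget ends.

    `request_judgment` is a hypothetical callable that returns one new
    judgment (for example "yes" or "no") from a different user each call.
    """
    votes = []
    while len(votes) < max_votes:
        votes.append(request_judgment())
        label = consensus(votes, **kwargs)
        if label is not None:
            return label, votes
    return None, votes  # no consensus: the item needs further review


# Usage: simulate incoming judgments with a fixed sequence of answers.
answers = iter(["yes", "no", "yes", "yes", "yes"])
final_label, collected = collect_until_consensus(lambda: next(answers))
```

In this run the first three votes give only two-thirds agreement, so a fourth judgment is requested before the item is accepted.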

Using Ads to Build Datasets

While ads are universally used to drive sales through conversions, we use them to build high-quality datasets at scale. By splitting complex annotation workflows into simple micro-tasks, we can package them in gamified ad units such as playable ads and take advantage of the huge scale they offer. Our ad units are built in HTML5 and can run on any device, from smartphones to tablets and PCs. Depending on the complexity and size of the images, we can target different devices to complete these tasks.

Validation of Production Models

While some companies are still at the development stage, others already run models in production. To verify that predictions are correct and to catch model drift over time, we have designed a process that validates datasets as well as builds them. In this process, we receive annotated datasets and, using a different set of micro-tasks, ask users to point out data issues. These issues can later be used to understand the model’s limitations and weak points, helping data teams reduce costs by focusing their annotation and data selection efforts.
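One way flagged issues could be turned into a view of a model's weak points is to compute the share of flagged items per predicted class. The Python sketch below assumes each validated item is reduced to a `(predicted_class, flagged)` pair, where `flagged` is the crowd's verdict that the annotation has an issue; this data shape and the function name are hypothetical.

```python
from collections import Counter


def issue_rate_by_class(items):
    """Return the fraction of flagged items for each predicted class.

    `items` is an iterable of (predicted_class, flagged) pairs; this
    layout is an assumption made for the example.
    """
    total = Counter()
    flagged_count = Counter()
    for predicted_class, flagged in items:
        total[predicted_class] += 1
        if flagged:
            flagged_count[predicted_class] += 1
    return {cls: flagged_count[cls] / total[cls] for cls in total}


validated = [
    ("car", False),
    ("car", False),
    ("car", True),
    ("car", False),
    ("bicycle", True),
    ("bicycle", True),
]
rates_by_class = issue_rate_by_class(validated)
# Classes sorted from most to least problematic.
weakest_first = sorted(rates_by_class, key=rates_by_class.get, reverse=True)
```

A data team could then direct new annotation and data selection effort at the classes at the top of such a list.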

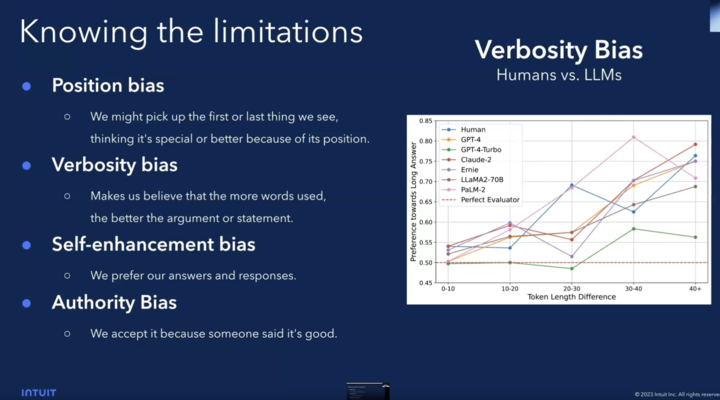

Fairness & Preventing Bias in AI

One of the major issues faced by AI developers is bias. Because models are trained on examples, their predictions reflect the subjective point of view of whoever labeled those examples. While employing expert and consistent annotators can help models converge faster, it can also introduce unwanted bias, especially when collecting judgments on opinionated or trending data. By using a multinational and diverse crowd, we collect votes from people of different demographics and backgrounds and reach consensus with a higher level of certainty.

Disrupting Internet Economies

Tasq as an Alternative Currency

Streaming content providers such as Netflix and Spotify charge users for their services, but payment is always tied to the banking system and credit card companies. Tasq offers an alternative payment method: users unlock content from such services with annotation work done on a smartphone, regardless of the country they live in or whether they have a bank account or a credit card.

Tasq Redefines How Publishers Measure Lifetime Value (LTV)

Ads exist to drive traffic to other pages or apps and convert it. A conversion is counted when a user makes a purchase, after which the chance of another conversion from that user drops significantly, so the publisher has to drive new traffic in order to increase sales. As a result, user LTV decreases with the number of ads they see.

Tasq ad units work the opposite way: the more ads users see, the more trained they become. As a result, conversion rates increase over time, bringing more value to the publisher while reducing the cost of buying new traffic.