Unveiling the Best GenAI Model for Retail: A Comparative Study Using Tasq.ai’s Eval Genie

Tasq.ai’s Eval Genie offers a comprehensive comparative study to guide retailers in making informed decisions.

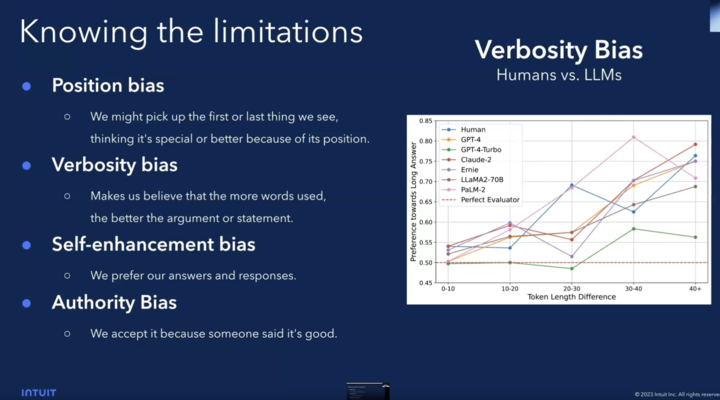

The 57% Hallucination Rate in LLMs: A Call for Better AI Evaluation

The 57% Hallucination Rate in LLMs: A Call for Better AI Evaluation Author: Max Milititski Introduction Large Language Models (LLMs) are rapidly evolving, pushing the boundaries of what AI can achieve. However, the traditional methods used to evaluate their capabilities are struggling to keep pace with this rapid advancement. Here’s why traditional LLM evaluation methods […]

Quantifying and Improving Model Outputs and Fine-Tuning with Tasq.ai

In a recent webinar, we showed how Tasq.ai’s LLM evaluation analysis led to a 71% improvement in results. In the dynamic world of AI, where every algorithmic breakthrough propels us into the future, Tasq.ai, in collaboration with Iguazio (now part of McKinsey), has set a new precedent in the realm of Machine Learning Operations (MLOps). […]

Why Human Guidance is Essential for the Success of Your Ecommerce Business: A Comprehensive Guide

In the bustling world of ecommerce, where every click, view, and purchase leave a digital footprint, Data is incredibly important. From understanding consumer behavior to optimizing marketing strategies, data holds the key to unlocking growth and staying ahead of the competition. However, in its raw form, data is often complex, unstructured, and challenging for machines […]

LLM Wars 1: Angry Tweet Rewrite Mistral vs. ChatGPT

It’s the great LLM Wars! Can Mistral AI as an open-source challenger finally take on the mighty #ChatGPT? We used Tasq.ai’s global Decentralized Human Guidance workers (Tasqers) to find out.

Data Split: Training, Validation, and Test Sets

Read more about importance of proper data splitting in machine learning to avoid models being inaccurately trained on a narro

Unleashing the Power of LLM Fine-Tuning

Explore LLM fine-tuning, with a specific focus on leveraging human guidance in LLM fine-tuning to outmaneuver competitors and some text here

How Does Polygon Annotation for Computer Vision Image Recognition Work?

Image and video annotation can be difficult if we have a limited amount of tools. For example, you cannot use bounding box when some text

Image Quality Assessment

The quality of a machine learning model in supervised machine learning is directly correlated with the quality of the data us…

AI Ethics – The Hope and the Worry

To ensure AI’s future is responsible, we must ask off-putting ethical questions. In this article, we aim to introduce …