AI holds huge potential to make many tasks easier, but it can also amplify negative outcomes if data scientists don’t recognize biases in datasets and correct them before model training. Careless data handling produces flawed, worthless output (garbage in, garbage out).

As AI becomes integrated into more and more aspects of life, biases are more likely to spread through increasingly complex systems, while identifying and preventing them remains a far slower process.

But first, let’s define bias in a clear and useful way.

Bias can be defined as an anomaly in the output of machine learning algorithms, caused by prejudiced assumptions made during algorithm development or present in the training data. It can be separated into two types:

- Bias caused by incomplete data – Incomplete data in the training set can cause bias. Incomplete data can be defined as damaged or insufficiently processed data. Including such data in the machine learning process inevitably leads to poor output and wasted time.

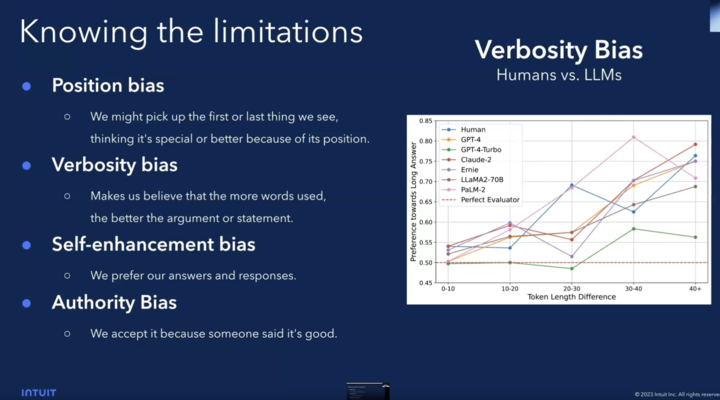

- Human-caused biases – Biases that stem from unconscious errors in human reasoning, which arise as the brain tries to simplify how it processes information about a subject. Keep in mind that the creators of biased data are humans, and that human-made algorithms are also the main tools for identifying and removing bias.

A representative example is Amazon’s machine learning-based recruiting system, which showed bias by favoring male candidates. This happened because the system learned patterns from resumes that came predominantly from men. Although top tech companies have committed to closing the gender gap in hiring, the disparity is still clearly visible among technical staff: positions such as software developer are dominated by men, while women dominate non-technical roles. (source: Reuters)
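One common way to check a screening model for this kind of bias is to compare selection rates across groups. The sketch below is not how Amazon’s system was built or audited; it is a minimal illustration that assumes invented shortlisting decisions and the hypothetical helper names `selection_rates` and `disparate_impact`. The 0.8 cutoff is the "four-fifths rule" from US employment-selection guidance, used here only as an example threshold.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions (1 = shortlisted) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in decisions:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(rates, unprivileged, privileged):
    """Ratio of selection rates; values well below 1.0 mean the privileged group is favored."""
    return rates[unprivileged] / rates[privileged]


# Hypothetical screening output as (group, shortlisted) pairs, invented for illustration.
decisions = (
    [("male", 1)] * 30 + [("male", 0)] * 70
    + [("female", 1)] * 12 + [("female", 0)] * 88
)

rates = selection_rates(decisions)
ratio = disparate_impact(rates, "female", "male")
print(rates)             # {'male': 0.3, 'female': 0.12}
print(round(ratio, 2))   # 0.4
# Example threshold: the "four-fifths rule" flags ratios below 0.8.
print("flagged" if ratio < 0.8 else "ok")  # flagged
```

A ratio of 0.4 means women in this made-up data are shortlisted at less than half the rate of men, which is exactly the kind of pattern a model trained on historically skewed resumes can reproduce.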

Bias in AI is rarely obvious, and it shows up when results cannot be recognized and generalized. One concern is that bias is often unintentional, or may even be unavoidable given the way measurements are made. But this simply means we must estimate the error more carefully (for example, by setting confidence intervals) around the data points that drive the interpretation of the final results. Internally, AI relies on mathematical pattern recognition, and in the end all of this math must be reduced to a yes-or-no answer (is this a normal heart rate or not, is this your fingerprint or not, etc.).
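As a rough illustration of both ideas, the sketch below computes a normal-approximation (Wald) 95% confidence interval for a model’s accuracy on two groups, then reduces a continuous score to a yes/no decision. The counts, the helper names `proportion_ci` and `to_yes_no`, and the 0.5 threshold are all assumptions for illustration; for small samples or proportions near 0 or 1, a Wilson interval is usually a safer choice.

```python
import math


def proportion_ci(successes, n, z=1.96):
    """Normal-approximation (Wald) interval for a proportion; z=1.96 gives roughly 95%."""
    p = successes / n
    margin = z * math.sqrt(p * (1 - p) / n)
    # Clip to [0, 1] because a proportion cannot fall outside that range.
    return max(0.0, p - margin), min(1.0, p + margin)


def to_yes_no(score, threshold=0.5):
    """Reduce a model's continuous score to the final yes/no decision (threshold is assumed)."""
    return score >= threshold


# Hypothetical per-group accuracy counts: same point estimate, different sample sizes.
small_group = proportion_ci(45, 50)    # about (0.817, 0.983)
large_group = proportion_ci(180, 200)  # about (0.858, 0.942)
print(small_group, large_group)

print(to_yes_no(0.73), to_yes_no(0.41))  # True False
```

Both groups show 90% accuracy, but the smaller group’s interval is twice as wide, so an apparent difference between groups measured on only a few examples may not be meaningful.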

A few sources of bias are common and well known for their adverse impact on machine learning models. Some are found in the collected data itself, and others in the methods used to sample, filter, aggregate, and enhance that data.

- Biases in samples – Sampling bias occurs when data is oversampled from one community and undersampled from another. The result is a model that over-represents a particular characteristic, producing skewed or biased output. Ideally, the sample should be completely random or should match the characteristics of the target population.

- Biases in measurements – Measurement bias arises from inaccurate measurement or recording of the selected data.

- Confirmation biases – The tendency to accept only information that confirms one’s existing assumptions, rather than what the data actually suggests.

- Observer biases – Similar to confirmation bias, this is the habit of skipping over parts of the data during any kind of data processing. The result is distorted data and worthless output.

- Exclusion biases – Bias introduced by improperly removing data from its source, including during deduplication.
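A simple first check for sampling bias is to compare each group’s share in the collected sample with its share in the target population. This is a minimal sketch; the urban/rural split, the population shares, the `representation_gaps` helper, and the ±0.05 tolerance are hypothetical values chosen only to show the idea.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Difference between each group's share in the sample and in the target population.

    Positive gap = oversampled, negative gap = undersampled.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {
        group: counts[group] / n - share
        for group, share in population_shares.items()
    }


# Hypothetical numbers for illustration only.
sample = ["urban"] * 800 + ["rural"] * 200
population = {"urban": 0.55, "rural": 0.45}

gaps = representation_gaps(sample, population)
for group, gap in gaps.items():
    # The 0.05 tolerance is an arbitrary example value, not a standard.
    status = "oversampled" if gap > 0.05 else "undersampled" if gap < -0.05 else "ok"
    print(f"{group}: {gap:+.2f} ({status})")
# urban: +0.25 (oversampled)
# rural: -0.25 (undersampled)
```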

To avoid bias in AI-supported processes, you can adopt some of the practices listed below:

- Algorithmic hygiene as an imperative – Check the data as thoroughly as possible in order to produce valuable training data. This can be done by identifying the target audience for the application and tailoring the training data to that audience, or by training multiple versions of the classification algorithm: if several models give the same output, the data can be considered valuable for training.

- Identifying potential bias sources – The data examination process should include inspecting the collected data for redundant information before it enters the machine learning process.

- Multiple datasets differences – Feed multiple datasets to the model simultaneously and train it to learn the patterns the datasets reinforce in one another, so that it generalizes to each of them.

- Specified tuning – If datasets are cleaned of conscious and unconscious assumptions about race, gender, or other ideological concepts, there is a good chance of building a stable AI system that makes data-driven, unbiased decisions. However, simply removing classes (such as gender, race, or religion) from the data and deleting the labels that make the algorithm biased can worsen the final output, because it can affect the model’s understanding and make its results confusing when compared with similar tasks from the past.

- Review and monitor model processing – Spot gaps in machine learning performance, both during training and under real-world operating conditions.
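The "train multiple versions and compare their output" idea from the first practice resembles consensus filtering, where an example is trusted only if enough independently trained models agree with its recorded label. The sketch below assumes you already have each model version’s predictions (ideally made on data that version was not trained on); the prediction lists and the `consensus_filter` helper are hypothetical.

```python
from collections import Counter


def consensus_filter(labels, predictions_per_model, min_agreement=1.0):
    """Split example indices into kept and flagged by cross-model agreement.

    predictions_per_model: one list of predicted labels per trained model version.
    min_agreement: fraction of models that must predict the recorded label
    (1.0 means unanimous).
    """
    n_models = len(predictions_per_model)
    kept, flagged = [], []
    for i, label in enumerate(labels):
        votes = Counter(preds[i] for preds in predictions_per_model)
        if votes[label] / n_models >= min_agreement:
            kept.append(i)
        else:
            flagged.append(i)  # candidates for manual review, not automatic deletion
    return kept, flagged


# Hypothetical labels and predictions from three model versions.
labels = [1, 0, 1, 1, 0]
model_a = [1, 0, 1, 0, 0]
model_b = [1, 0, 0, 0, 0]
model_c = [1, 0, 1, 0, 1]

kept, flagged = consensus_filter(labels, [model_a, model_b, model_c], min_agreement=0.6)
print(kept, flagged)  # [0, 1, 2, 4] [3]
```

Flagged examples are sent for review rather than dropped outright, since deleting them blindly could itself introduce the exclusion bias described earlier.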

Demand for AI-based systems is growing worldwide, and avoiding bias in machine learning datasets is becoming an unavoidable part of many processes and AI data-driven businesses. Many well-known companies have recognized this trend and made developing and implementing unbiased AI structures in their systems an urgent priority. Some of the best-known tools for avoiding bias, or simply reducing the clutter in datasets, are listed below.

Tools for reducing and avoiding bias:

- IBM Watson OpenScale – Performs bias checking in real time.

- IBM AI Fairness 360 – An open-source library for detecting and mitigating bias in machine learning models, initially focused on binary classification problems.

- What-If Tool (TensorFlow) by Google – Used for testing performance in hypothetical situations, analyzing the importance of different data features, and visualizing model behavior across multiple models and subsets of input data for different machine learning metrics and outputs.

- FATE by Microsoft – A research group (Fairness, Accountability, Transparency, and Ethics in AI) that investigates the social implications of artificial intelligence, machine learning, and natural language processing.

How Tasq.ai Solves AI Bias Issues Through Diversity

Tasq.ai has developed the most comprehensive globally distributed network of Tasqers, which can be deployed immediately to create high-quality, unbiased datasets for your machine learning initiatives. We empower the most advanced data science teams to outsource their data labeling jobs to a diverse global crowd, eliminating the biases that come with hiring local BPOs. The Tasq.ai platform also enables data scientists to divide tasks by country, language, or culture as requested, and to distribute the data load instantly on request without any hassle, encouraging the development of unbiased AI systems.